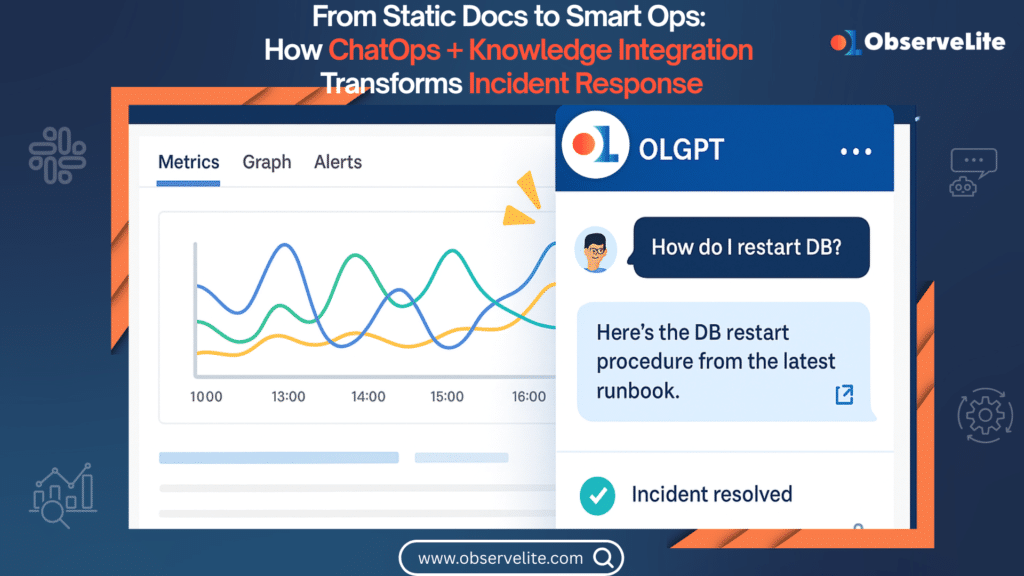

In fast-moving DevOps environments, every context switch costs time, focus, and reliability. Knowledge-Base & ChatOps Integration bridges the gap between static documentation and real-time collaboration by embedding an intelligent chatbot directly into your team’s communication channels. By surfacing runbooks and incident histories on demand, and even driving automated actions, this approach turns your collective knowledge into an always-on, interactive service. Below we explore how integrated ChatOps (OLGPT) enhances incident response, reduces downtime, and fosters continuous improvement.

1. Unified Access to Institutional Knowledge

When production incidents strike, there is no time to click through multiple systems. A ChatOps bot that indexes your Confluence pages, Markdown repos, and ticketing history delivers the right answer in seconds:

- Cross-Repository Search

The bot maintains a real-time index of all internal documentation. Ask “How do I rotate our SSL cert?” and it returns the precise commands from your security runbook, complete with code snippets and links to the relevant policy.

- Contextual Recall

Mention an incident ID or service name, and the bot stitches in the original postmortem, recent change logs, and any associated metrics graphs. No more guessing which document holds the answers.

By making every piece of institutional knowledge instantly queryable in natural language, teams spend less time hunting for details and more time resolving issues.
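The cross-repository search described above can be made concrete with a small inverted index. This is a minimal sketch, not how OLGPT itself works. It assumes documents from Confluence, Markdown repos, and the ticketing system have already been exported as plain text. The `Doc` and `DocIndex` names and the ID format are hypothetical. A production bot would more likely rely on a search engine or embedding-based retrieval than on this naive token-overlap ranking.

```python
import re
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class Doc:
    doc_id: str  # hypothetical ID scheme, e.g. "md:runbooks/ssl.md"
    source: str  # e.g. "confluence", "markdown", "jira"
    text: str


def tokenize(text: str) -> list[str]:
    """Lowercase alphanumeric tokens; deliberately naive (no stemming or stop words)."""
    return re.findall(r"[a-z0-9]+", text.lower())


class DocIndex:
    """Inverted index mapping each token to the IDs of documents containing it."""

    def __init__(self) -> None:
        self.postings: dict[str, set[str]] = defaultdict(set)
        self.docs: dict[str, Doc] = {}

    def add(self, doc: Doc) -> None:
        # Re-adding an existing doc_id replaces the stale version.
        if doc.doc_id in self.docs:
            self.remove(doc.doc_id)
        self.docs[doc.doc_id] = doc
        for token in set(tokenize(doc.text)):
            self.postings[token].add(doc.doc_id)

    def remove(self, doc_id: str) -> None:
        doc = self.docs.pop(doc_id)
        for token in set(tokenize(doc.text)):
            self.postings[token].discard(doc_id)

    def search(self, query: str, limit: int = 3) -> list[str]:
        # Score = number of distinct query tokens found in the document.
        scores: dict[str, int] = defaultdict(int)
        for token in set(tokenize(query)):
            for doc_id in self.postings.get(token, ()):
                scores[doc_id] += 1
        # Highest score first; ties broken alphabetically for stable output.
        ranked = sorted(scores.items(), key=lambda kv: (-kv[1], kv[0]))
        return [doc_id for doc_id, _ in ranked[:limit]]
```

When a page changes, re-adding it under the same `doc_id` replaces its old postings. This is one simple way to keep the index current without rebuilding it from scratch.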

2. 24/7 Q&A and Guided Follow-Ups

Whether it’s the middle of the night or the edge of a holiday weekend, this ChatOps integration never sleeps:

- Immediate Troubleshooting Steps

On-call engineers can ask for “database restart procedure” or “rollback playbook” and get step-by-step instructions without paging teammates. This not only speeds up resolution but also standardizes responses.

- Interactive Prompts

After you execute a suggested command, the bot can automatically follow up: “Did that restore service health? Reply ‘yes’ or ‘no’.” If problems persist, it escalates to the next set of steps or creates a ticket in Jira.

These guided interactions flatten the on-call learning curve, enabling even junior engineers to handle complex incidents with confidence.
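The yes/no follow-up loop described above is essentially a small state machine. The sketch below assumes a runbook can be represented as an ordered list of step strings. `GuidedFollowUp` and its `open_ticket` callback are hypothetical names. The callback stands in for whatever ticketing integration (for example Jira) a real bot would call.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class GuidedFollowUp:
    """Walks an engineer through runbook steps, one yes/no reply at a time."""

    steps: list[str]
    # Hypothetical hook that returns a ticket key; in practice it might wrap a Jira client.
    open_ticket: Callable[[str], str]
    index: int = 0
    done: bool = False

    def prompt(self) -> str:
        return (f"Step {self.index + 1}: {self.steps[self.index]}\n"
                "Did that restore service health? Reply 'yes' or 'no'.")

    def on_reply(self, reply: str) -> str:
        if self.done:
            return "This follow-up is already closed."
        answer = reply.strip().lower()
        if answer == "yes":
            self.done = True
            return "Glad it worked. Closing the follow-up."
        if answer != "no":
            return "Please reply 'yes' or 'no'."
        # Service still unhealthy: advance to the next step if there is one.
        self.index += 1
        if self.index < len(self.steps):
            return self.prompt()
        # Runbook exhausted: escalate by opening a ticket.
        self.done = True
        key = self.open_ticket("Runbook exhausted: " + " -> ".join(self.steps))
        return f"All steps tried. Escalated as {key}."
```

Passing `open_ticket` in as a callback keeps the escalation logic testable without a live ticketing system.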

3. Incident History as a Living Resource

Every conversation and every resolution is a data point that fuels smarter operations:

- Conversation Logging & Tagging

All Q&A exchanges are archived and tagged by topic, severity, and outcome. When a similar incident recurs, the bot surfaces prior solutions and outlines what worked, saving precious minutes during critical moments.

- Automated Runbook Suggestions

Post-incident, the bot analyzes chat transcripts and proposes edits to existing runbooks. It might flag missing steps, clarify ambiguous instructions, or recommend adding screenshots where confusion occurred.

Over time, your documentation evolves organically, shaped by real-world incidents rather than static policy updates, so your runbooks stay accurate and actionable.
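The "surface prior solutions" step can be sketched as a similarity search over tagged incident records. Here Jaccard similarity on tag sets is swapped in as a simple, illustrative measure. The source does not say how similarity is computed. The `IncidentRecord` fields, the `"topic:..."`/`"sev:..."` tag convention, and the 0.3 threshold are all assumptions.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class IncidentRecord:
    incident_id: str
    tags: frozenset[str]  # assumed convention, e.g. {"topic:postgres", "sev:2"}
    outcome: str          # "resolved" or "unresolved"
    resolution: str       # what worked, summarized from the chat transcript


def jaccard(a: frozenset[str], b: frozenset[str]) -> float:
    """Tag overlap from 0.0 (disjoint) to 1.0 (identical)."""
    if not a and not b:
        return 0.0
    return len(a & b) / len(a | b)


def similar_resolved(history: list[IncidentRecord], tags: set[str],
                     threshold: float = 0.3, limit: int = 3) -> list[IncidentRecord]:
    """Resolved incidents whose tags overlap enough with the new incident's tags."""
    query = frozenset(tags)
    scored = [(jaccard(rec.tags, query), rec)
              for rec in history if rec.outcome == "resolved"]
    scored = [pair for pair in scored if pair[0] >= threshold]
    # Most similar first; ties broken by incident ID for deterministic output.
    scored.sort(key=lambda pair: (-pair[0], pair[1].incident_id))
    return [rec for _, rec in scored[:limit]]
```

Unresolved incidents are filtered out so that only records with a known fix are suggested during a live incident.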

4. One-Click Actions and Alert Context Enrichment

Integration with CI/CD and monitoring platforms collapses the entire alert-to-remediation cycle into a single chat thread:

- Alert Enrichment

When Prometheus or Datadog fires an alert, the bot pushes a message into Slack or Teams with key context: recent deployments, related incident IDs, and links to dashboards. No more frantic window-switching across consoles.

- In-Chat Remediation

From the same thread, you can trigger a rollback, execute a health-check script, or open a critical ticket in ServiceNow, all via simple slash commands. Each action is tracked in the conversation log for audit purposes.

This seamless workflow reduces mean time to resolution (MTTR) by removing manual steps and keeping all activity in one place.
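Alert enrichment amounts to joining the alert with deployment and incident data before posting it. The sketch below assumes the alert payload has already been normalized into a dict with `name`, `service`, and `fired_at` keys. Real Prometheus Alertmanager or Datadog webhooks use their own formats and would need mapping first. `enrich_alert`, `Deployment`, the two-hour window, and the dashboard URL scheme are all hypothetical.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class Deployment:
    service: str
    version: str
    deployed_at: datetime


def enrich_alert(alert: dict, deployments: list[Deployment],
                 incidents_by_service: dict[str, list[str]],
                 dashboard_base: str,
                 window: timedelta = timedelta(hours=2)) -> str:
    """Format a chat message that adds deploy, incident, and dashboard context.

    Assumes `alert` was already normalized to 'name', 'service', and 'fired_at'.
    """
    service = alert["service"]
    fired_at = alert["fired_at"]
    # Deployments of the same service shortly before the alert are prime suspects.
    recent = sorted(
        (d for d in deployments
         if d.service == service and fired_at - window <= d.deployed_at <= fired_at),
        key=lambda d: d.deployed_at,
    )
    lines = [f"[ALERT] {alert['name']} on {service}"]
    if recent:
        lines.append("Recent deployments: " + ", ".join(
            f"{d.version} at {d.deployed_at:%Y-%m-%d %H:%M}" for d in recent))
    else:
        lines.append(f"No deployments of {service} in the last {window}.")
    related = incidents_by_service.get(service, [])
    if related:
        lines.append("Related incidents: " + ", ".join(related))
    lines.append(f"Dashboard: {dashboard_base}?service={service}")
    return "\n".join(lines)
```

The function returns plain text. A real integration would post it through the chat platform's own API.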

5. Security, Permissions, and Compliance

Not every user should see every document, so a robust ChatOps integration respects your organization’s access controls:

- Scoped Knowledge Views

The bot enforces role-based permissions: developers see code-related runbooks, SREs access infrastructure docs, and support teams get customer-facing procedures. Sensitive security playbooks remain locked down.

- Audit Trails

Every query, every document fetch, and every remediation command is logged with timestamps, user IDs, and channels. This provides full traceability for postmortems and compliance audits.

By combining convenience with strict governance, you ensure that only authorized users can access confidential procedures or initiate high-impact actions.
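Scoped views and audit trails can be combined in a single access check. The sketch below assumes each document carries one scope label and that roles map to sets of scopes. The `ROLE_SCOPES` table, `Document`, `AuditLog`, and `fetch_document` are all hypothetical. Both allowed and denied attempts are logged as JSON lines carrying the timestamp, user, and channel fields the section mentions.

```python
import json
from dataclasses import dataclass
from datetime import datetime, timezone
from typing import Optional

# Hypothetical mapping from chat roles to the document scopes they may read.
ROLE_SCOPES: dict[str, set[str]] = {
    "developer": {"code"},
    "sre": {"code", "infrastructure"},
    "support": {"customer"},
    "security": {"code", "infrastructure", "security"},
}


@dataclass
class Document:
    doc_id: str
    scope: str  # e.g. "code", "infrastructure", "customer", "security"


class AuditLog:
    """Append-only list of JSON lines; a real bot would ship these to durable storage."""

    def __init__(self) -> None:
        self.entries: list[str] = []

    def record(self, user: str, channel: str, action: str,
               target: str, allowed: bool) -> None:
        self.entries.append(json.dumps({
            "ts": datetime.now(timezone.utc).isoformat(),
            "user": user,
            "channel": channel,
            "action": action,
            "target": target,
            "allowed": allowed,
        }))


def fetch_document(doc: Document, user: str, roles: set[str],
                   channel: str, log: AuditLog) -> Optional[Document]:
    """Return the document only if one of the user's roles grants its scope.

    Every attempt, allowed or denied, is written to the audit log.
    """
    permitted = set().union(*(ROLE_SCOPES.get(r, set()) for r in roles))
    allowed = doc.scope in permitted
    log.record(user, channel, "fetch", doc.doc_id, allowed)
    return doc if allowed else None
```

Logging denied attempts as well as successful ones lets compliance reviews see who tried to reach sensitive playbooks, not just who succeeded.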

6. Analytics and Continuous Improvement

Data-driven metrics turn everyday interactions into strategic insights:

- Usage Dashboards

Track which runbooks and commands are most frequently requested. Identify gaps in coverage where users repeatedly ask for the same information, and prioritize documentation efforts accordingly.

- Feedback Mechanisms

Team members can rate the usefulness of each answer or flag outdated content. These signals feed back into a documentation backlog, ensuring the knowledge base remains fresh and relevant.

- Performance Metrics

Measure the impact of ChatOps on MTTR, incident volume, and on-call workload. By monitoring these KPIs, leadership can quantify the ROI of your integration and make informed decisions about further investment.

Over time, this closed-loop system turns your ChatOps bot into an ever-smarter teammate, surfacing patterns, anticipating needs, and driving operational excellence.
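A few of these metrics can be computed directly from the bot's logs. The sketch below assumes each query is logged with a normalized question and a flag saying whether the bot found an answer. It treats a "coverage gap" as a question asked repeatedly without an answer, which is an assumption about how gaps are defined. MTTR is computed as the mean of resolved-minus-detected times. `QueryEvent`, `top_requests`, `coverage_gaps`, and `mttr` are hypothetical names.

```python
from collections import Counter
from dataclasses import dataclass
from datetime import datetime, timedelta
from statistics import mean


@dataclass
class QueryEvent:
    question: str   # assumed already normalized, e.g. "rotate ssl cert"
    answered: bool  # did the bot find a matching document?


def top_requests(events: list[QueryEvent], n: int = 5) -> list[tuple[str, int]]:
    """Most frequently asked questions that the bot could answer."""
    return Counter(e.question for e in events if e.answered).most_common(n)


def coverage_gaps(events: list[QueryEvent], min_count: int = 2) -> list[str]:
    """Questions asked repeatedly with no answer: candidates for new docs."""
    misses = Counter(e.question for e in events if not e.answered)
    return sorted(q for q, count in misses.items() if count >= min_count)


def mttr(incidents: list[tuple[datetime, datetime]]) -> timedelta:
    """Mean time to resolution over (detected_at, resolved_at) pairs."""
    if not incidents:
        raise ValueError("need at least one resolved incident")
    return timedelta(seconds=mean(
        (resolved - detected).total_seconds() for detected, resolved in incidents))
```

Comparing `mttr` before and after rollout is one rough way to approach the ROI question. Incident mix changes over time, though, so the comparison is indicative rather than conclusive.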

Conclusion

Knowledge-Base & ChatOps Integration isn’t just about convenience; it’s a force multiplier for any DevOps organization. By unifying access to runbooks and wikis, enabling round-the-clock Q&A, automating post-incident updates, and embedding one-click actions, you drastically reduce context-switching and accelerate incident response. Coupled with rigorous permissioning and analytics, this approach transforms static documentation into a living, breathing system of insight. As a result, teams spend less time searching and more time innovating, keeping systems resilient, compliant, and always ready for what’s next.