Artificial intelligence is transforming how businesses operate, automate, and innovate. But as more organizations adopt AI, one issue is becoming impossible to ignore: data privacy.

Most AI models, especially the large language models (LLMs) behind public tools, process information in shared, cloud-based environments. That means when your team feeds sensitive data into a public LLM, you can lose control over where that data goes and how it’s stored.

This is where private AI for business becomes critical. It ensures your proprietary data stays secure, your compliance requirements are met, and your risk exposure stays minimal — all while leveraging powerful AI capabilities.

Below are five reasons why private AI for business, like OLGPT, outperforms public LLMs when it comes to privacy and security.

1. Public LLMs Come With Hidden Data Privacy Risks

The Hidden Risks of Public LLMs

Public LLMs are trained and hosted on shared infrastructure provided by third parties. When you use them, your prompts and inputs are typically processed in multi-tenant environments. Even if the provider claims not to “store” or “see” your data, the architecture is not under your control.

According to the World Economic Forum’s “Artificial Intelligence and Cybersecurity: Balancing Risks and Rewards” report, public AI systems introduce significant cybersecurity exposures due to shared infrastructure and limited data-control guarantees.

Here’s what that means in real terms:

- Your inputs could be stored temporarily (or longer) for model training or logging

- There’s potential for data leakage via cached queries or accidental model memorization

- There is no guarantee of where the data is processed, and it is often spread across multiple jurisdictions

- You have little or no visibility into what security controls are applied in transit or at rest

For highly regulated industries — like finance, healthcare, legal, or defense — this is an unacceptable risk.

2. Private AI Keeps All Data Within Your Control

What Makes Private AI Different?

Unlike public LLMs, private AI models are deployed in isolated, secure environments, often on-premises or within your organization’s trusted cloud perimeter.

Let’s break down the critical differences:

| Feature | Public LLMs | Private AI (OLGPT) |
| --- | --- | --- |
| Data Location | Processed on external servers | Stays in your infrastructure |
| Environment | Shared, multi-tenant | Isolated, private |
| Security Control | Limited/no visibility | Fully customizable |
| Compliance | Often non-compliant with GDPR, HIPAA, etc. | Aligns with internal compliance needs |
| Integration | External APIs only | Embedded into secure workflows |

Private AI offers enterprise-grade assurance: your data stays inside your environment and is not shared with or exposed to external vendors.

3. Real-World Scenarios Show Why Public LLMs Are Unsafe

Legal or Financial Use

Imagine your legal team uses a public LLM to summarize sensitive case files. That data could contain:

- Names of clients under NDA

- Legal arguments or IP

- Privileged communication

Even if the data is anonymized, uploading it to a public system exposes it to risks you cannot control.

Now imagine doing the same with OLGPT, a private AI for business:

- The data never leaves your secure network

- All processing happens in a zero-trust environment

- You define who has access, how long logs are retained, and what security policies apply

That’s not just secure — that’s smart.

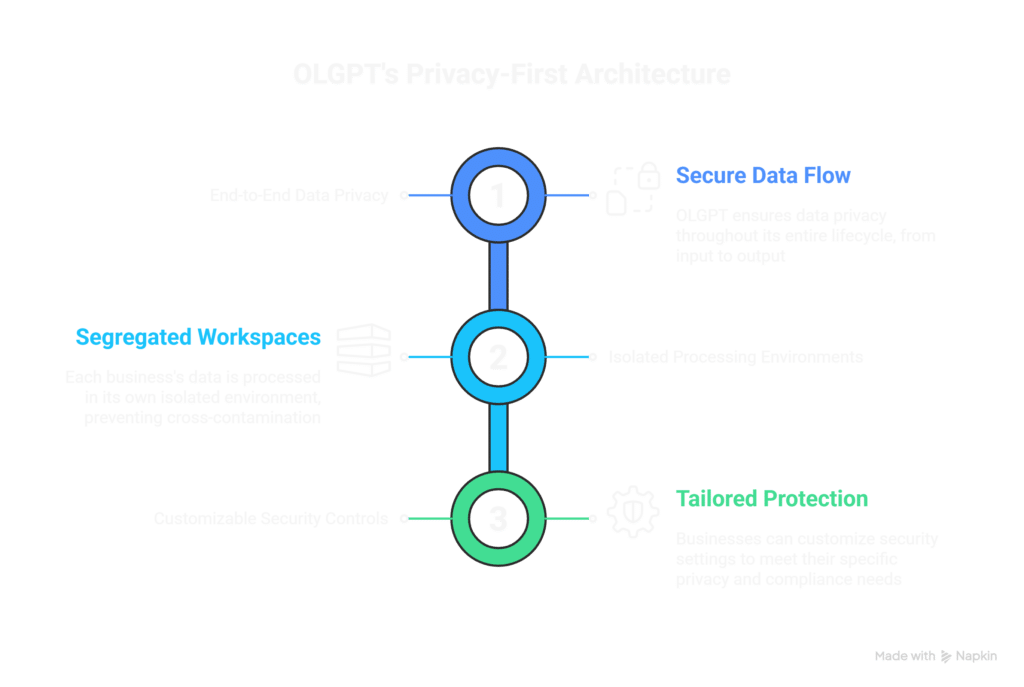

4. OLGPT Ensures End-to-End Privacy Through Secure Architecture

Inside OLGPT’s Privacy-First Architecture

OLGPT is designed from the ground up to give businesses full confidence that AI doesn’t come at the cost of privacy. Here’s how:

1. End-to-End Data Privacy

From input to output, OLGPT ensures that:

- No queries are stored outside your control

- Data is encrypted in transit and at rest

- No third-party vendor sees or uses your data for model training

Your business data remains your business.
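To make “encrypted in transit” concrete, here is a minimal sketch of how a client inside your network might build its TLS settings before calling a privately hosted model. It uses only Python’s standard `ssl` module. The function name `build_client_tls_context` and the optional CA bundle parameter are assumptions for illustration, not OLGPT’s actual implementation, and the sketch does not cover encryption at rest.

```python
import ssl
from typing import Optional


def build_client_tls_context(ca_bundle_path: Optional[str] = None) -> ssl.SSLContext:
    """Return a TLS context that verifies certificates and refuses old protocols.

    ca_bundle_path is a hypothetical path to your organization's internal CA
    bundle; when it is None, the system's default trust store is used.
    """
    # create_default_context turns on certificate verification and hostname checks.
    context = ssl.create_default_context(cafile=ca_bundle_path)
    # Refuse anything older than TLS 1.2.
    context.minimum_version = ssl.TLSVersion.TLSv1_2
    return context


context = build_client_tls_context()
```

A context like this can be passed to standard-library clients such as `http.client.HTTPSConnection(host, context=context)`, so every request to the model endpoint is encrypted and the server’s identity is checked.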

2. Isolated Processing Environments

OLGPT runs in containerized, sandboxed environments — fully separated from external traffic or users. This prevents:

- Cross-session data contamination

- Accidental data leaks between teams or tenants

- External API snooping or injection threats

Whether deployed on-premises or in your private cloud, the AI works inside your perimeter, not someone else’s.
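One way to picture “inside your perimeter” is an outbound allowlist: application code refuses to call any host that is not explicitly approved. The sketch below is illustrative only. The host names in `ALLOWED_HOSTS` and the helper `is_egress_allowed` are hypothetical, and a real deployment would typically enforce the same rule at the network layer as well.

```python
from urllib.parse import urlsplit

# Hypothetical internal hosts; replace with the endpoints your team approves.
ALLOWED_HOSTS = frozenset({"llm.internal.example", "vectors.internal.example"})


def is_egress_allowed(url: str, allowed_hosts: frozenset = ALLOWED_HOSTS) -> bool:
    """Allow only HTTPS requests whose host is on the explicit allowlist."""
    parts = urlsplit(url)
    # hostname is None for malformed URLs, so fall back to an empty string.
    host = (parts.hostname or "").lower()
    return parts.scheme == "https" and host in allowed_hosts
```

Because the check compares the full host name rather than a prefix, a look-alike such as `llm.internal.example.evil.test` is rejected along with any plain-HTTP request.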

3. Customizable Security Controls

With OLGPT, your IT/security team controls:

- User access levels

- Audit logs of every prompt/response

- Retention policies

- Firewall rules and external API restrictions

This makes OLGPT ideal for regulated environments where auditability and data lineage are key.
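The controls listed above can be sketched in a few lines of Python. This is a simplified illustration, not OLGPT’s actual configuration format: the role names, the `AuditLog` class, and the 30-day retention window in the usage note are all assumptions chosen for the example.

```python
from dataclasses import dataclass, field
from datetime import datetime, timedelta, timezone
from typing import List, Optional

# Hypothetical roles and the actions each one may perform.
ROLE_PERMISSIONS = {
    "analyst": {"query"},
    "admin": {"query", "read_audit_log"},
}


def is_allowed(role: str, action: str) -> bool:
    """Return True only if the role is known and explicitly grants the action."""
    return action in ROLE_PERMISSIONS.get(role, set())


@dataclass
class AuditEntry:
    timestamp: datetime
    user: str
    prompt: str
    response: str


@dataclass
class AuditLog:
    retention: timedelta
    entries: List[AuditEntry] = field(default_factory=list)

    def record(self, user: str, prompt: str, response: str,
               now: Optional[datetime] = None) -> None:
        """Append one prompt/response pair with a UTC timestamp."""
        now = now or datetime.now(timezone.utc)
        self.entries.append(AuditEntry(now, user, prompt, response))

    def purge_expired(self, now: Optional[datetime] = None) -> int:
        """Drop entries older than the retention window; return how many were removed."""
        now = now or datetime.now(timezone.utc)
        cutoff = now - self.retention
        kept = [entry for entry in self.entries if entry.timestamp >= cutoff]
        removed = len(self.entries) - len(kept)
        self.entries = kept
        return removed
```

In use, a security team might create `AuditLog(retention=timedelta(days=30))`, call `record(...)` on every request, and run `purge_expired()` on a schedule so that logs never outlive the agreed retention policy.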

5. Private AI Helps Businesses Stay Compliant and Protect Long-Term Trust

Public vs Private AI Models: Use Cases

| Industry Scenario | Public LLMs | OLGPT Private AI for business |
| --- | --- | --- |
| Healthcare: Summarizing patient case histories or clinical notes | ❌ High risk of PHI exposure, violates HIPAA | ✅ Secure, on-prem or compliant environment ensures data safety |
| Fintech: Analyzing confidential financial reports or audit data | ❌ Exposes sensitive financial and regulatory data | ✅ Fully isolated and auditable, ideal for SOC 2 and PCI compliance |
| EdTech: Generating custom learning feedback using student data | ❌ Risk of violating student data privacy (FERPA) | ✅ Controlled access and logging, safe for educational records |
| Manufacturing: Optimizing production workflows using proprietary system logs | ❌ IP and operational data risks | ✅ Data remains within the trusted enterprise network |

The deeper the AI goes into your systems, the more you need privacy and control.

Why Data Privacy in AI Is Now a Business Priority

In 2025 and beyond, data privacy in AI isn’t just a technical issue — it’s a brand, legal, and business continuity issue.

Without proper safeguards, public LLM usage can lead to:

- Compliance violations (e.g., GDPR, HIPAA, SOC 2)

- Loss of customer trust

- Internal data leaks that hurt your competitiveness

Private AI for business ensures you get the benefits of automation and intelligence — without sacrificing control.

Final Take: Keep Your Data Where It Belongs

Public LLMs might be convenient, but they come with invisible risks that can cost your business dearly.

By using OLGPT as your private AI for business, your organization gains:

- AI performance on par with public LLMs

- Full ownership and control of data

- Compliance-ready architecture

- Peace of mind that your sensitive info stays private

Intelligence should never come at the cost of privacy. With private AI for business, it doesn’t have to.

Interested in deploying private AI for your enterprise?

Get in touch to learn how OLGPT can be embedded securely into your environment — with your data, your rules.